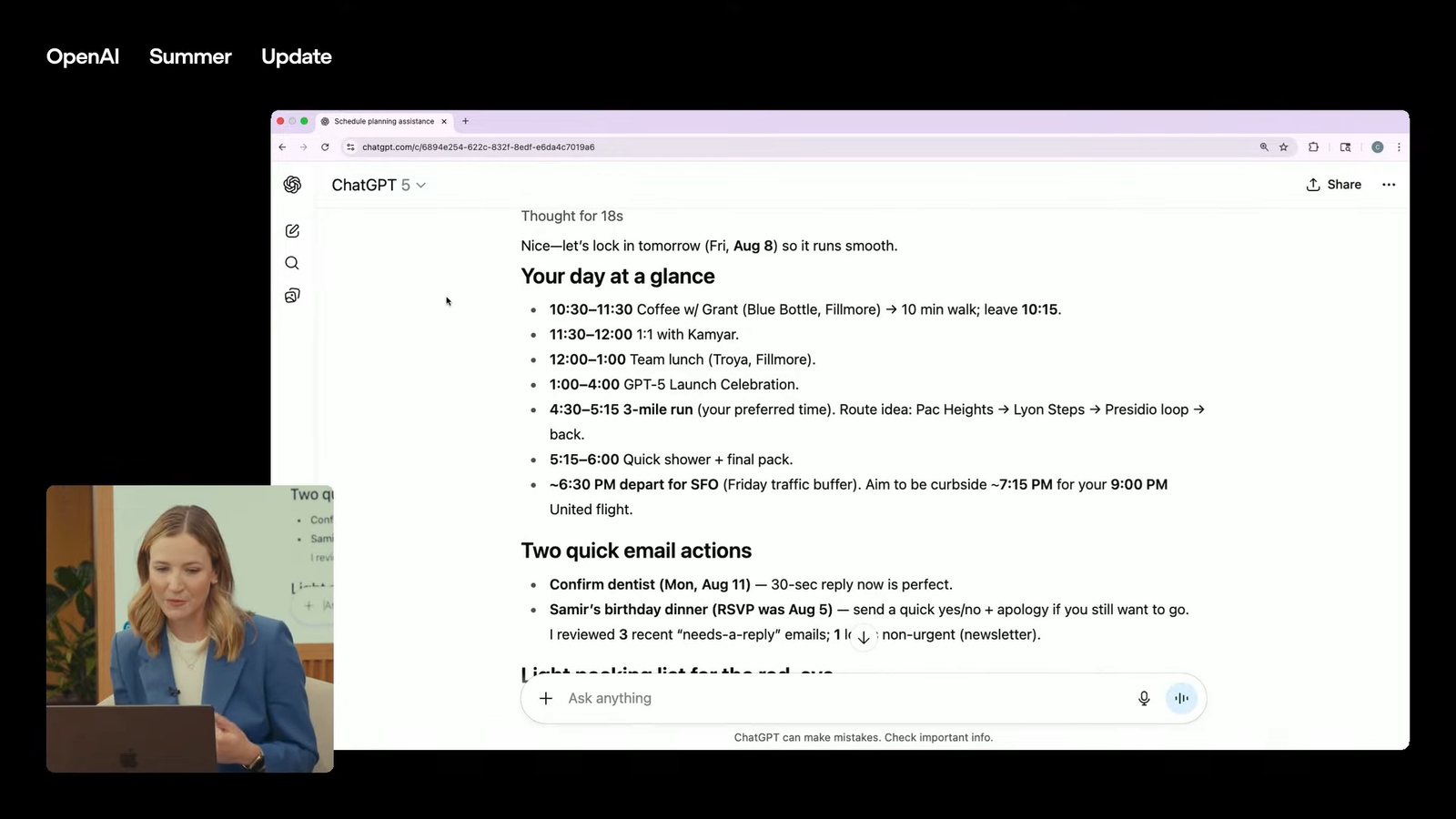

Using emerging technologies such as artificial intelligence and quantum information science to safeguard existing digital networks and streamline workflows can bring agencies both benefits and risks, government experts say.

“AI and quantum are a double-edged sword here,” said Kevin Walsh, director of Information Technology and Cybersecurity at the Government Accountability Office. “It’s a tool. This is not some magic thing.”

Leaders from across federal agencies and laboratories weighed the benefits and drawbacks of integrating emerging technologies into government systems during an ATARC panel discussion on Thursday.

Walsh said the legacy systems that underpin government operations are a hurdle both to incorporating new technologies, such as quantum cryptography, and to maintaining adequate security protocols.

“Going forward: If you’re building a system now, you should be thinking about the encryption algorithms that you’re using that are going to, you know, help protect you in 10 years,” Walsh said. He added that the arrival of a cryptographically relevant quantum computer will also test the limits of what current government systems can do.

“These are the kind of forward-thinking things that we need to be thinking about, as well as how to secure our own existing systems,” Walsh said. “There will be many systems that are not able to implement quantum. Period.”

Keeping government software and hardware up to par is critical for defending against “harvest now, decrypt later” attacks, in which adversaries are expected to collect and store encrypted data today so they can decrypt it once a fault-tolerant quantum computer becomes available.

Incorporating AI into these networks is also challenging, even though the technology is comparatively mature. Tameika Turner, senior cybersecurity program manager at the National Nuclear Security Administration, said the work needed to safely reconcile existing security programs such as Secure by Design with new technology is often overlooked.

“With AI being out, we are kind of trying to catch up from a cyber perspective. We’ve got to put all the frameworks out,” Turner said. “Everyone’s trying to add AI functionality onto the tools that are already existing, which kind of gets in the way of Secure by Design, because these systems already exist. Some are legacy, and they’re like, ‘Well, I just want to turn on AI, it shouldn’t be a problem, right?’”

Turner noted the need for greater oversight of data management when AI software is given access to existing data stores. She explained that centralizing agency data to train large language models for AI tools cuts both ways. The chief risk is that consolidation shrinks the area potential attackers must cover to reach important data; at the same time, centralization can enable faster breach detection.

“We have to make a determination on what type of data we want to actually input into these large language models, and are we okay with that?” she said. “There is great opportunity there, especially when you start talking about incident response and those sort of things. But there’s also great risk when you start to centralize some of that data into one solution.”

For Walsh, managing the tradeoff between benefit and risk starts with carefully assessing whether an AI or future quantum information system is actually necessary for a given operation.

Both he and Turner stressed that ongoing vigilance and steady maintenance of security protocols remain the best defense for safeguarding government digital assets.

“The things that we have been preaching all along: having multifactor authentication, keeping your software up to date, having offline backups, strong passwords, monitoring your network…I believe those are the kinds of things that even in the face of huge amounts of brute force attacks, those are still going to serve you well,” Walsh said.

“Don’t treat AI as magic,” Turner said. “Treat it as an untrusted component.”