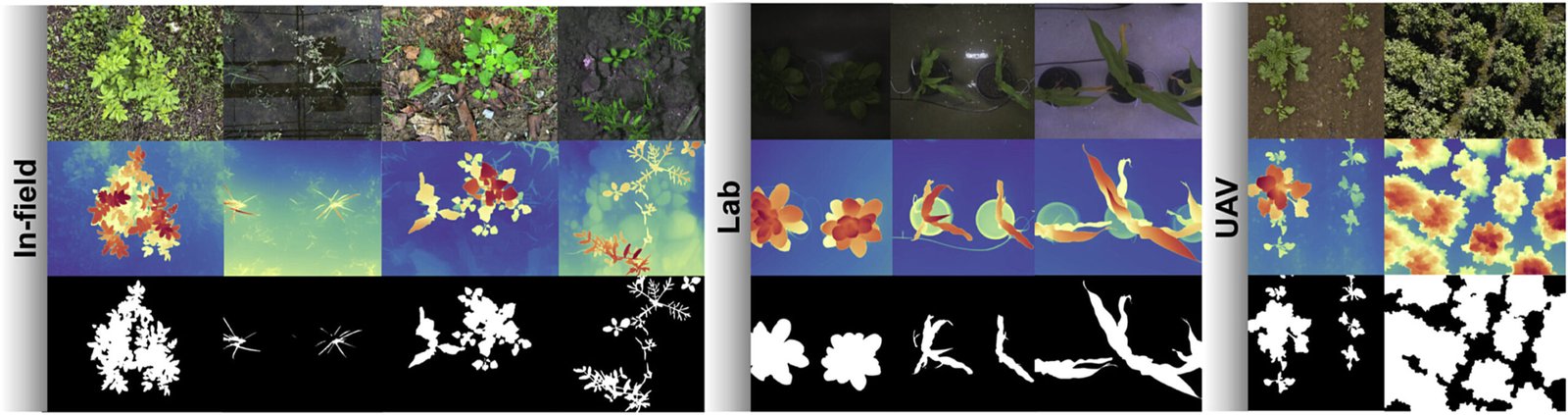

By leveraging a vision foundation model called Depth Anything V2, the method can accurately segment crops across diverse environments—field, lab, and aerial—reducing both time and cost in agricultural data preparation.

Crop segmentation, the process of identifying crop regions in images, is fundamental to agricultural monitoring tasks such as yield prediction, pest detection, and growth assessment. Traditional methods relied on color-based cues, which struggled under varying lighting and complex backgrounds.

Deep learning has revolutionized this field, but it requires large, meticulously labeled datasets—an expensive and labor-intensive bottleneck. Previous efforts to reduce labeling costs using weak or self-supervised learning have faced trade-offs in performance or required complex setups. The advent of foundation models now offers a new paradigm.

A study published in Plant Phenomics by Hao Lu’s team, Huazhong University of Science and Technology, reduces labor and cost in high-throughput plant phenotyping and precision agriculture.

To evaluate the effectiveness of DepthCropSeg, the researchers implemented a comprehensive experimental protocol involving four mainstream semantic segmentation models—U-Net, DeepLabV3+, SegFormer, and Mask2Former—as baselines, alongside two category-agnostic models, SAM and HQ-SAM. These models were trained using both coarse and fine sets of pseudo-labeled crop images generated by Depth Anything V2, and their performance was benchmarked against fully supervised models trained on manually annotated data.

Evaluation was conducted using mean Intersection over Union (mIoU) across ten public crop datasets. SegFormer, the best-performing model, was further enhanced through a two-stage self-training procedure and depth-informed post-processing.

The results demonstrated that DepthCropSeg, with its custom pseudo-labeling and self-training strategy, achieved segmentation performance nearly equivalent to fully supervised models (87.23 vs. 87.10 mIoU), outperforming simpler depth-based methods (e.g., Depth-OTSU, Depth-GHT) by over 10 mIoU and foundation models SAM and HQ-SAM by about 20 mIoU.

Ablation studies confirmed the significant contributions of manual screening, self-training, and depth-guided filtering—each improving segmentation accuracy by 2–5 mIoU. Post-processing with depth-guided joint bilateral filtering notably reduced visual noise while being robust to parameter changes.

Qualitative results on self-collected images further illustrated DepthCropSeg’s superior boundary delineation over other models. However, the method showed limitations in handling full-canopy images due to an underrepresentation of such cases in the training set. The analysis revealed a bias in manual screening, favoring sparse-canopy images, though this can be corrected by supplementing more full-coverage examples.

Overall, the study validated that high-quality pseudo masks, when combined with minimal manual screening and depth-informed techniques, can achieve near-supervised crop segmentation performance, introducing a scalable and cost-effective solution for agricultural image analysis.

DepthCropSeg introduces a transformative learning paradigm that sidesteps the need for exhaustive manual labeling. By combining foundation model capabilities with minimal human screening, it achieves segmentation accuracy rivaling traditional methods—paving the way for faster, cheaper, and more scalable agricultural AI applications.

More information:

Songliang Cao et al, The blessing of Depth Anything: An almost unsupervised approach to crop segmentation with depth-informed pseudo labeling, Plant Phenomics (2025). DOI: 10.1016/j.plaphe.2025.100005

Provided by

Chinese Academy of Sciences

Citation:

Vision model brings almost unsupervised crop segmentation to the field (2025, August 8)

retrieved 8 August 2025

from https://phys.org/news/2025-08-vision-unsupervised-crop-segmentation-field.html

This document is subject to copyright. Apart from any fair dealing for the purpose of private study or research, no

part may be reproduced without the written permission. The content is provided for information purposes only.