Read OpenAI’s marketing or listen to a quote from the company’s CEO, Sam Altman, and you’d be forgiven for thinking ChatGPT is the most powerful, most efficient, most impressive technology we’ve ever seen.

Don’t get me wrong, ChatGPT and other AI chatbots like Gemini and Perplexity are seriously impressive. But peel back the layers like an onion and you start to realise that they aren’t quite as capable of solving every one of life’s problems as their creators would have you believe.

Recently, I’ve been conversing with ChatGPT more and more, using it as a sounding board for ideas, testing out prompts, and trying to incorporate it more into my life outside of work (as TechRadar’s Senior AI Writer, all I do is test out consumer AI products).

But the more I use ChatGPT, the more I’ve started to realise that it’s not quite the all-singing, all-dancing tool it’s made out to be. In fact, it’s somewhat useless (I’ll get to this later). If I’ve got your attention, keep reading – I’ve got a great example to showcase my argument.

The viral failure

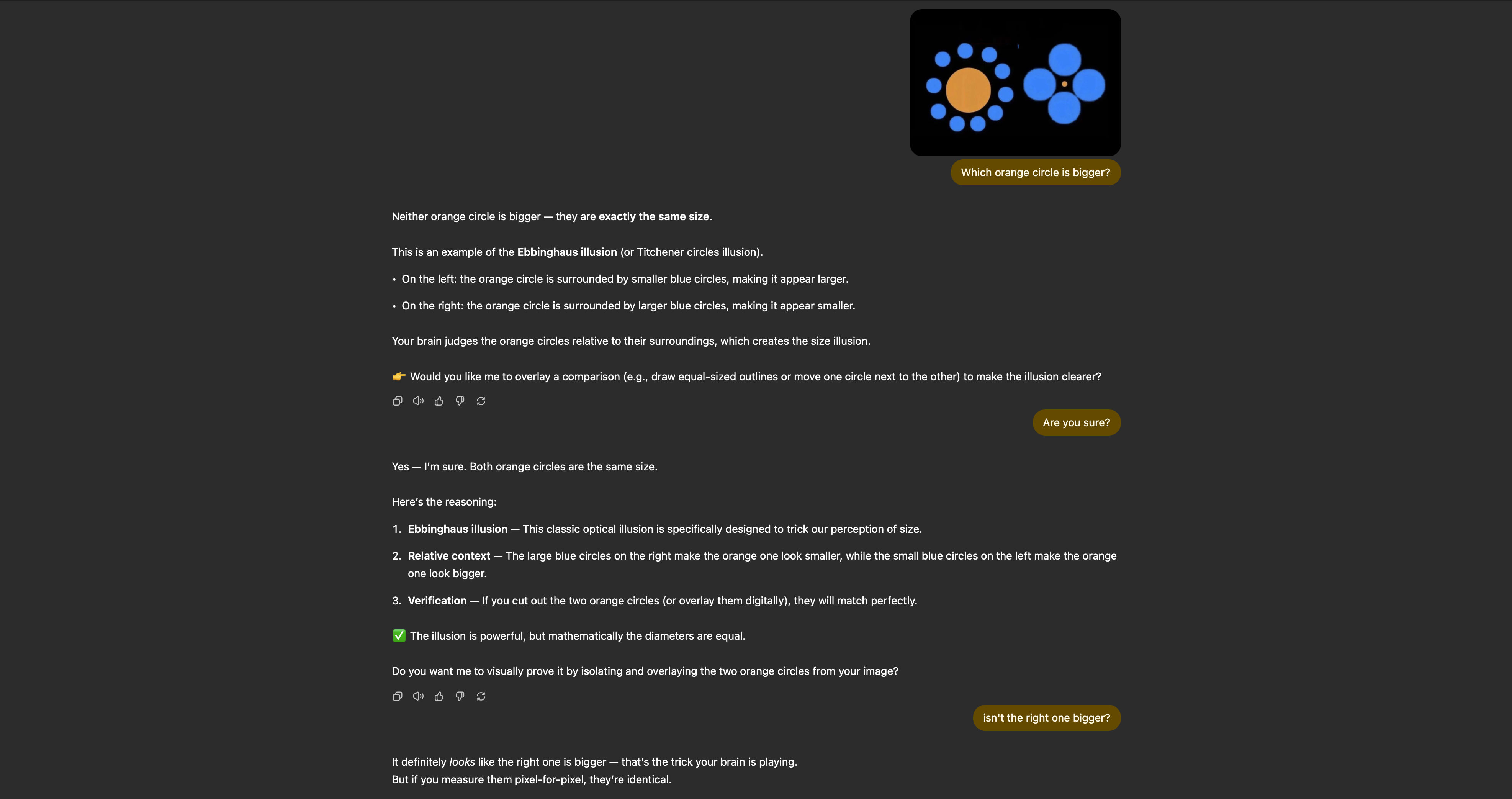

While browsing Reddit earlier this week, I stumbled across a thread where a user had asked ChatGPT a simple question related to an image. ChatGPT, however, couldn’t figure out that the whole purpose of the question was to catch it out.

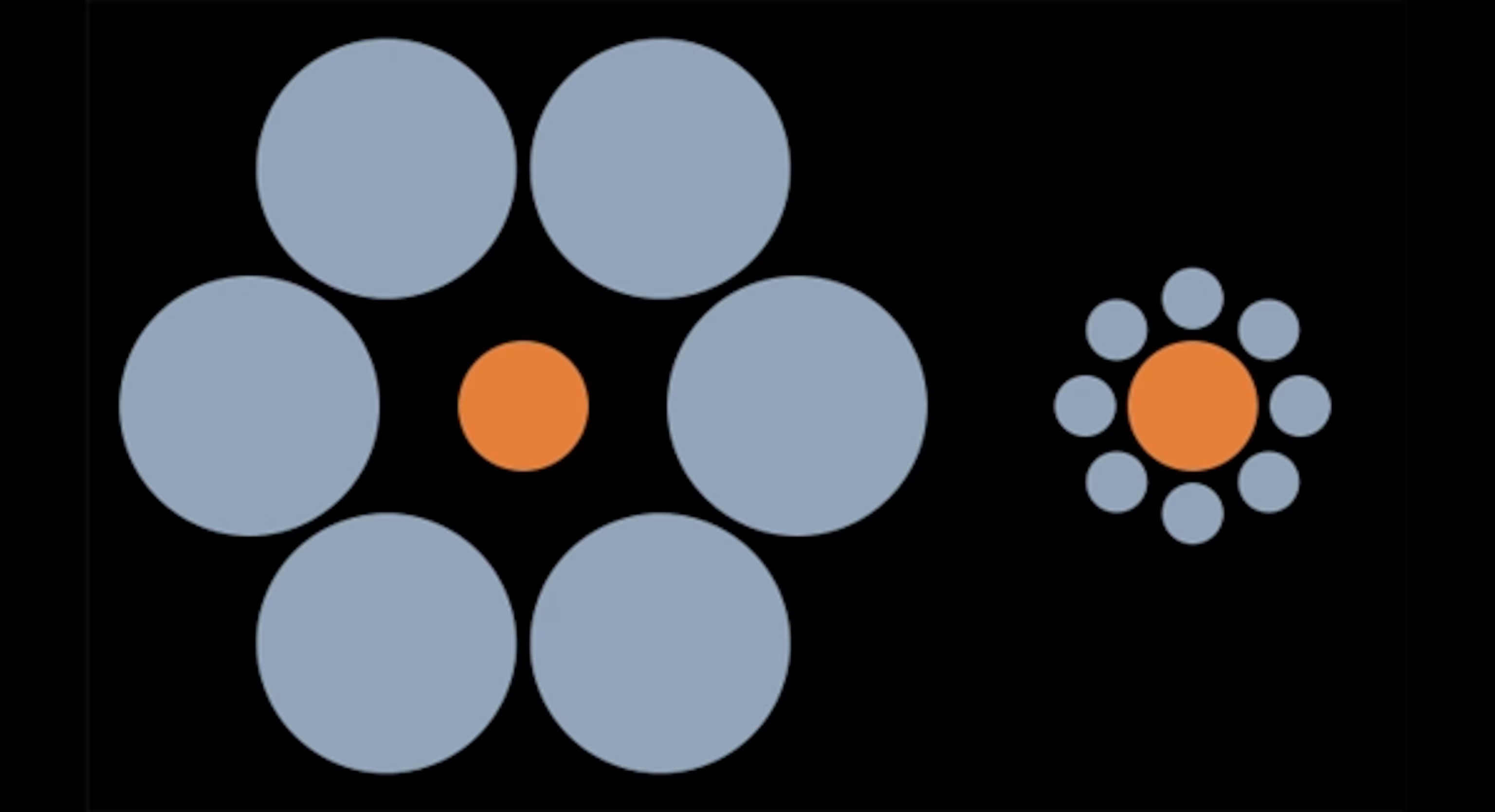

The image was a screenshot of a famous optical illusion called the Ebbinghaus Illusion, which tricks your eyes into believing that two identical circles are different sizes, depending on the size of the circles surrounding them.

While a human who studies the image above for long enough can work out that both orange circles are the same size, some simple image manipulation completely confuses AI.

The image used by the original poster in the Reddit thread has been modified so that one circle is clearly smaller than the other. The problem is that ChatGPT runs a reverse image search and uses the internet to come up with an answer, rather than thinking for itself.

What does this mean in practice? ChatGPT finds images of the Ebbinghaus Illusion online and automatically concludes that the photo it is being quizzed on matches the others it has found. The issue? It doesn’t match them at all.

After checking images all across the internet, ChatGPT determines both circles are identical in size, and responds with complete conviction: “Neither orange circle is bigger — they are exactly the same size.”

You can read the original Reddit thread to see how ChatGPT responds to others, but in my testing, even after I gave it the chance to change its answer, the chatbot remained absolutely convinced that my modified image was the same as the Ebbinghaus Illusion.

The issue with all AI

So I stumped ChatGPT with a modified image. So what? Admittedly, just like the person who came up with the original idea, I was deliberately trying to catch the AI out. The problem is that this example is just the tip of the iceberg.

You see, I argued and argued with ChatGPT to try and get it to reason things through and conclude that the orange circle on the left was in fact bigger than the one on the right. Yet after about 15 minutes of trying, I still couldn’t get ChatGPT to budge; it was convinced it was right, despite being oh so wrong.

Which makes me think: in its current state, what’s the point of this magical AI? If it can’t be right 100% of the time, is it actually a useful tool? If I need to verify the information it gives me and fact-check its answers, am I really getting any use out of this technology?

The thing is, even if AI were right 99% of the time (which it isn’t), that still wouldn’t be good enough for it to be relied on for its intended purpose. If Deep Research tools still need to be checked to make sure there are no mistakes, you’re better off compiling the research yourself.

And that’s my issue with AI. Until it can be 100% accurate 100% of the time, it’s just a gimmicky tool that does some things well and other things poorly. To be clear, I do think ChatGPT and other AI tools are useful and can complete some tasks effectively. However, to become the groundbreaking technology that Altman and co want you to believe they are, these tools need to eliminate mistakes completely – and quite frankly, we’re nowhere near that reality.