A computer that uses light rather than digital switches for calculations could help reduce the energy demands of artificial intelligence (AI), according to a new study. The scientists who invented the computer describe it as a new computing paradigm.

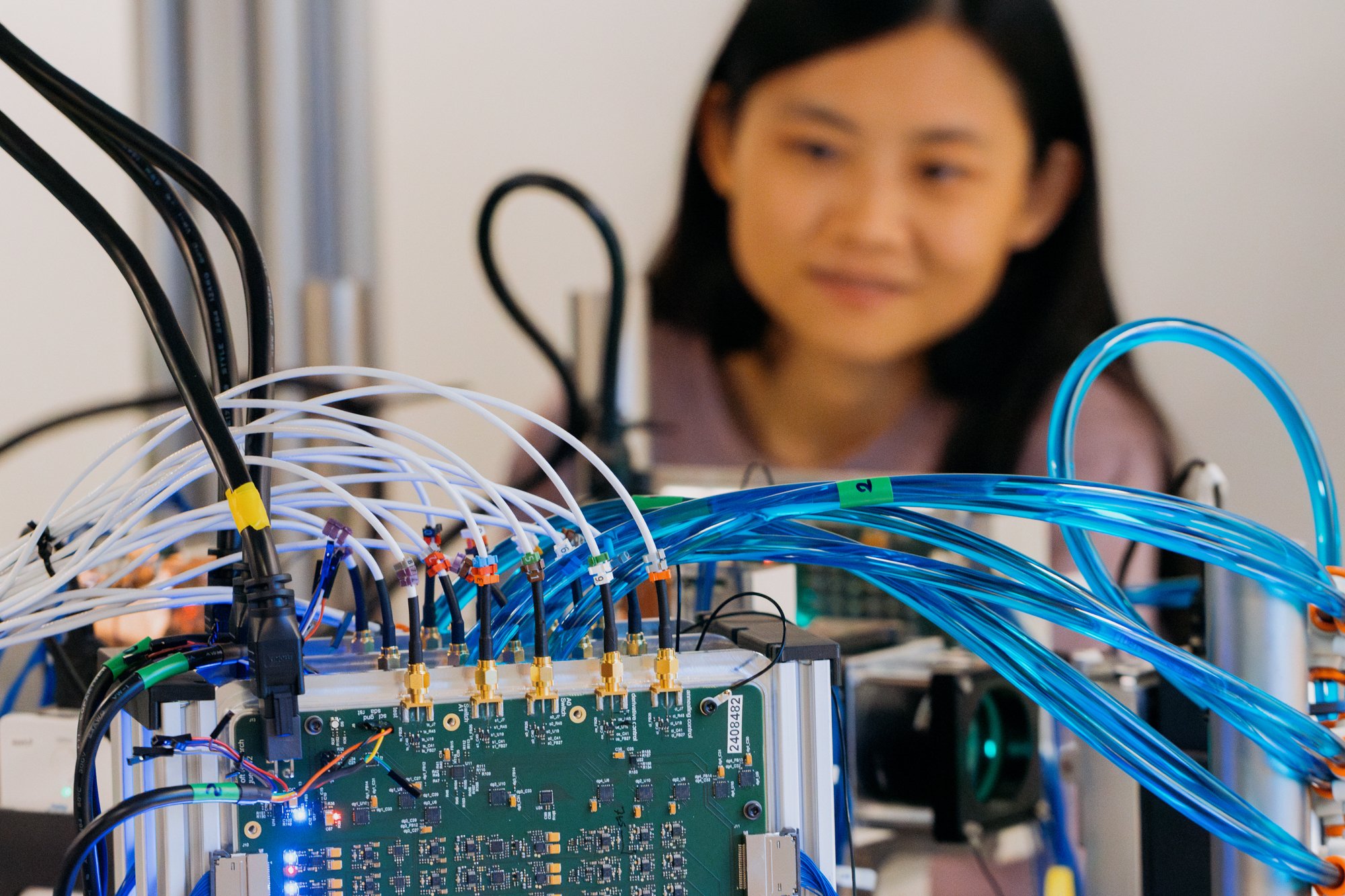

Microsoft researchers developed a prototype analog optical computer (AOC) that can perform some machine learning tasks typically handled by AI and can also solve optimization problems.

The new computing system could one day solve certain problems faster and with less energy than modern digital computers can, the scientists wrote in the study, published Sept. 3 in the journal Nature.

“The most important aspect the AOC delivers is that we estimate around a hundred times improvement in energy efficiency,” study co-author Jannes Gladrow, an AI researcher at Microsoft, said in a Microsoft blog post. “That alone is unheard of in hardware.”

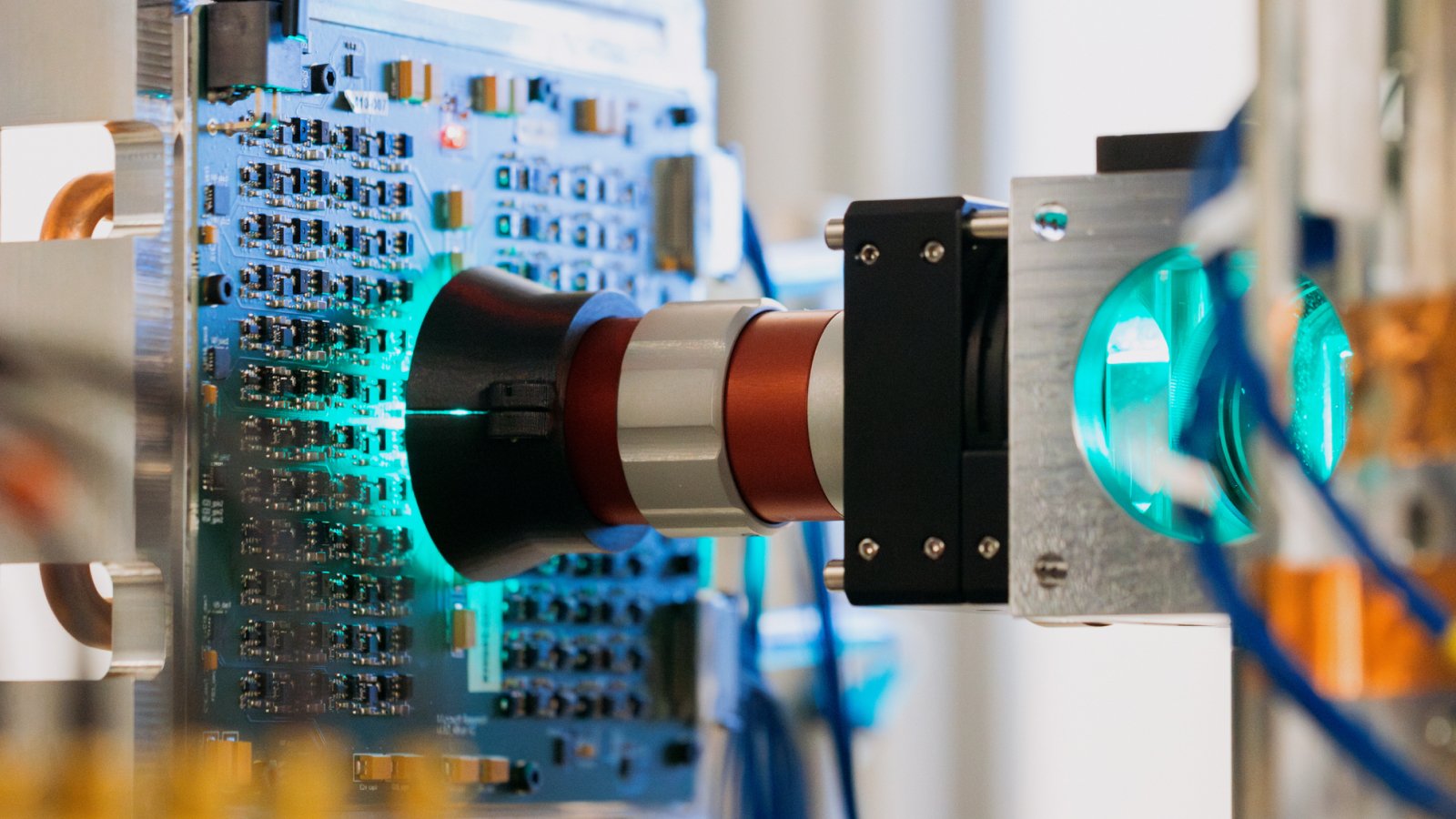

In the new study, Microsoft researchers built a computer that uses micro-LEDs and camera sensors to perform calculations.

Unlike regular digital computers, which flip billions of tiny switches to perform calculations, the new system uses light and voltages of varying intensity to add and multiply numbers in a feedback loop. The AOC runs a problem through this loop many times, each pass improving on the last, until it settles into a “steady state,” which represents the final solution.
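To make the idea of a feedback loop that runs until it reaches a steady state more concrete, here is a minimal digital sketch written under our own assumptions. The function name `find_steady_state`, the weights and biases, and the use of `tanh` as a saturating nonlinearity are hypothetical illustrations, not Microsoft's design. In the AOC itself, light and voltages carry out the additions and multiplications rather than software.

```python
import math


def find_steady_state(weights, bias, x0, tol=1e-9, max_iters=10_000):
    """Repeat x <- tanh(W x + b) until x stops changing (a fixed point).

    Hypothetical digital stand-in for an analog feedback loop: the
    multiply-and-add step mimics what the hardware does physically, and
    tanh is an assumed saturating nonlinearity.
    Returns the steady-state vector and the number of passes taken.
    """
    x = list(x0)
    for step in range(1, max_iters + 1):
        # Multiply and add: each output is a weighted sum of the current state.
        y = [sum(w_ij * x_j for w_ij, x_j in zip(row, x)) + b_i
             for row, b_i in zip(weights, bias)]
        # Apply the nonlinearity, producing the state fed into the next pass.
        x_new = [math.tanh(v) for v in y]
        # Stop once successive passes agree: the loop has reached steady state.
        if max(abs(new - old) for new, old in zip(x_new, x)) < tol:
            return x_new, step
        x = x_new
    raise RuntimeError("did not reach a steady state within max_iters")


# Example: a small 2-variable problem with deliberately small weights.
W = [[0.2, -0.1], [0.05, 0.3]]
b = [0.5, -0.2]
state, passes = find_steady_state(W, b, [0.0, 0.0])
print(state, passes)
```

The weights in the example are kept small so that each pass shrinks the remaining error and the loop is guaranteed to settle; with arbitrary weights, an iteration like this can oscillate or fail to converge, which is one reason a steady-state finder suits only certain problems.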


Because the AOC doesn’t convert analog signals to digital ones during its calculations, it saves energy and sidesteps some of the speed limitations inherent in digital computing.

This specialized computation method “makes it a special-purpose ‘steady-state finder’ for certain AI and optimization problems, not a general-purpose computer,” Aydogan Ozcan, an optical computing researcher at UCLA who was not involved in the research, told Live Science in an email.

But for those specific purposes, the AOC could offer significant improvements over digital computing, the researchers wrote in the study.

A new, light-based computing paradigm

The team also programmed a “digital twin” — a computer model that mimics the physical AOC’s computations. This digital twin can be scaled up to handle more variables and more complex calculations.

“The digital twin is where we can work on larger problems than the instrument itself can tackle right now,” Michael Hansen, senior director of biomedical signal processing at Microsoft Health Futures, said in the blog post.

The team first had the AOC run some simple machine learning tasks, such as classifying images. The physical AOC performed about as well as a digital computer. A future, larger AOC that can handle more variables could far surpass digital computers in energy efficiency, the team wrote.

Then, the researchers used the AOC’s digital twin to reconstruct a 320-by-320-pixel brain scan from just 62.5% of the original data. The digital twin accurately reproduced the scan, a feat the scientists say could lead to shorter MRI times.

Finally, the team used the AOC to solve a series of financial problems that involved finding the most efficient way to exchange funds among multiple parties while minimizing risk, a challenge that clearinghouses face daily. The AOC solved these problems with a higher success rate than existing quantum computers.

For now, the AOC is a prototype. But as future models add more micro-LEDs, the machines could become much more powerful, computing with millions or billions of variables at a time.

“Our goal, our long-term vision is this being a significant part of the future of computing,” Hitesh Ballani, a researcher in Microsoft’s Cloud Systems Futures team, said in the blog post.